This comprehensive guide helps Excel users transition to Pandas—Python’s data powerhouse. Learn how to clean, analyze, and visualize data more efficiently than ever before. From setup to advanced manipulation, this post shows how to move beyond spreadsheet limits and automate repetitive tasks seamlessly.

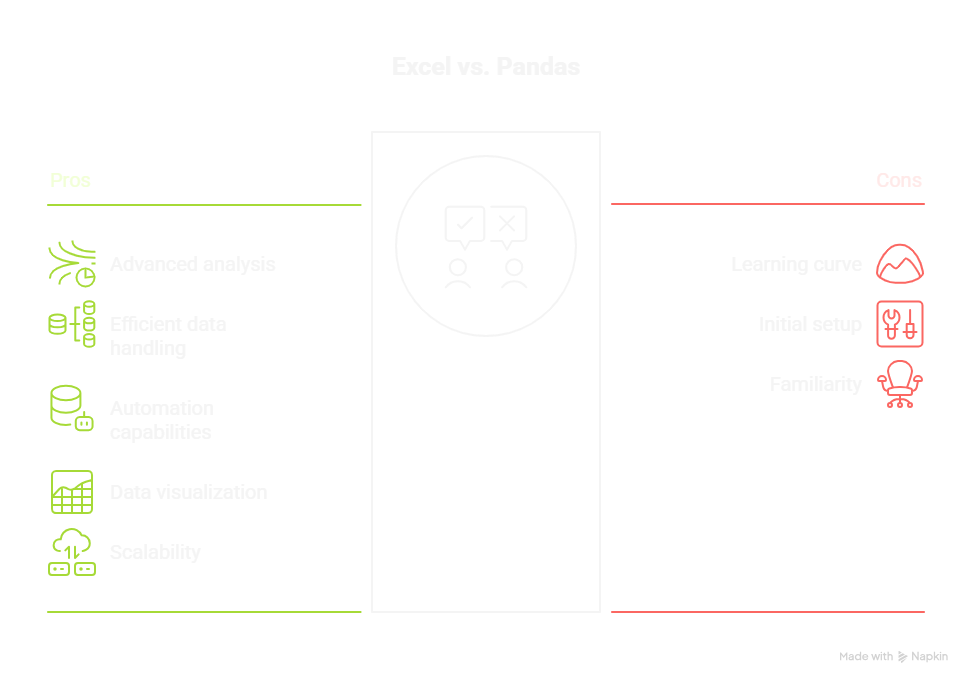

Excel is the go-to tool for data analysis, but as datasets grow larger, many users start hitting spreadsheet limitations and discover they need a new tool. Enter Pandas, a powerful Python library for working with tabular data at scale. This guide to Pandas for Excel users is tailor-made for those who are ready to enhance their analytical skills and streamline their workflows.

By bridging the gap between familiar spreadsheet functions and more advanced data manipulation techniques, this guide shows how to use Pandas to work faster and more reproducibly. It covers everything from data cleaning and transformation to in-depth analysis. Get ready to elevate your data game and transform how you interact with information!

Understanding the Basics of Pandas

Pandas is a powerful and versatile data manipulation library for Python that has revolutionized the way data analysts and scientists interact with data. This open-source library provides high-performance, easy-to-use data structures and data analysis tools. These tools are designed to handle structured data effectively and efficiently, making operations like data cleaning, manipulation, and analysis seamless.

For Excel users, Pandas offers a way to overcome the limitations of traditional spreadsheets, especially when dealing with large datasets and complex operations. Monthly Excel reports are a common example: many professionals rebuild them by hand every month, even though Python can automate the repetitive steps.

At its core, Pandas is built on two primary data structures: the Series (one-dimensional) and the DataFrame (two-dimensional). These structures are optimized for performance and built to handle a wide variety of data types. A Series is akin to a single column in an Excel spreadsheet, while a DataFrame is comparable to an entire worksheet.
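To make the column-versus-sheet analogy concrete, here is a minimal sketch. The column names and values are made up for illustration; the point is that selecting one column of a DataFrame gives you a Series.

```python
import pandas as pd

# A DataFrame is like a whole sheet: labelled rows and named columns.
sales = pd.DataFrame(
    {
        "region": ["North", "South", "East"],
        "units": [120, 85, 97],
    }
)

# Selecting one column returns a Series, like a single spreadsheet column.
units = sales["units"]

print(type(sales).__name__)  # DataFrame
print(type(units).__name__)  # Series
print(sales.shape)           # (3, 2): 3 rows, 2 columns
```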

The integration of these structures allows for more complex and sophisticated data manipulation techniques compared to what Excel offers. Additionally, Pandas provides a rich set of functions to perform operations like merging, reshaping, and aggregating data.

Understanding the basics of Pandas is crucial for transitioning from Excel to this powerful library. Familiarity with Python and its syntax will be beneficial, but even those new to programming can quickly grasp Pandas’ functionalities due to its intuitive design. Learning how to navigate and utilize these data structures will unlock new possibilities for data manipulation and analysis, making Pandas an invaluable tool for anyone looking to elevate their data handling capabilities.

Setting Up Your Environment: Installing Pandas

Before diving into the world of Pandas, you need to set up your environment. The first step is to install Python, which serves as the foundation for running Pandas. Python can be downloaded from its official website, and the installation process is straightforward. Once Python is installed, you can use package managers like pip or conda to install Pandas. Pip, which comes with Python, is the most commonly used package manager. You can install Pandas by running the command pip install pandas in your terminal or command prompt.
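A quick way to confirm the installation worked is to import the library and print its version. This is only a sanity check; the exact version string depends on what pip or conda installed.

```python
# Run this in Python after installing Pandas to confirm the library imports.
import pandas as pd

# Prints the installed version string; an ImportError here means the install failed.
print(pd.__version__)
```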

Another popular option for setting up your environment is using Anaconda, a distribution that includes Python and a host of useful data science libraries, including Pandas. Anaconda simplifies the process by providing a graphical user interface for managing packages and environments. After installing Anaconda, you can create a new environment and install Pandas by navigating through Anaconda’s user interface or using conda commands in the terminal. This method is particularly useful for users who prefer a more visual approach to managing their data science toolkit.

Once Pandas is installed, it’s crucial to familiarize yourself with a development environment where you can write and execute Python code. Jupyter Notebooks, which come bundled with Anaconda, are highly recommended for beginners and experienced users alike. They provide an interactive web-based interface that allows you to write, execute, and visualize code in a sequential manner. This interactivity is beneficial for experimenting with Pandas functions and observing their outputs in real-time. Setting up your environment correctly will ensure a smooth transition from Excel to Pandas, enabling you to fully harness the library’s capabilities.

Key Differences Between Pandas and Excel

One of the most significant differences between Pandas and Excel is the way they handle data. Excel is primarily a spreadsheet application that organizes data into cells, rows, and columns, allowing users to perform a variety of basic operations like sorting, filtering, and calculating formulas. However, a worksheet is capped at 1,048,576 rows and 16,384 columns, and complex, multi-step data manipulations quickly become hard to manage. Pandas, on the other hand, is designed for data manipulation and analysis, providing robust tools to handle large volumes of data efficiently.

Another key difference lies in the flexibility and scalability of operations. While Excel relies on manual inputs and GUI-based interactions, Pandas leverages Python programming, which allows for automation and reproducibility. This means that tasks that would require repetitive manual steps in Excel can be automated with a few lines of code in Pandas. Additionally, Pandas can work with datasets of millions of rows, limited mainly by your computer's memory, where Excel would struggle with its row limit and performance constraints.
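As an illustration of that automation, the sketch below wraps a few cleanup steps in a function and applies it to several monthly tables at once. The function name `clean_report`, the column names, and the in-memory "monthly extracts" are all hypothetical; in a real workflow each table might come from `pd.read_excel`.

```python
import pandas as pd


def clean_report(df: pd.DataFrame) -> pd.DataFrame:
    """Hypothetical cleanup: the same steps you might otherwise repeat by hand."""
    out = df.copy()
    out.columns = out.columns.str.strip().str.lower()  # normalize header text
    out = out.dropna(subset=["amount"])                # drop rows missing an amount
    out["amount"] = out["amount"].astype(float)        # ensure a numeric column
    return out


# Hypothetical monthly extracts with messy headers and a missing value.
january = pd.DataFrame({" Month ": ["Jan", "Jan"], "Amount": [100, None]})
february = pd.DataFrame({" Month ": ["Feb", "Feb"], "Amount": [250, 75]})

# Apply the identical steps to every month, then stack the results.
combined = pd.concat(
    [clean_report(m) for m in [january, february]], ignore_index=True
)
print(combined)
```

Adding a new month then means adding one table to the list rather than repeating the manual steps.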

Furthermore, the range of functions and operations available in Pandas is considerably more extensive than in Excel. Pandas offers advanced data manipulation techniques like merging, reshaping, and pivoting, which can be cumbersome, or hard to repeat reliably, in Excel. Moreover, Pandas integrates seamlessly with other data science libraries such as NumPy, Matplotlib, and SciPy, providing a comprehensive ecosystem for data analysis. Understanding these key differences will highlight the advantages of Pandas and illustrate why it is a preferred tool for complex data tasks.
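Here is a small sketch of merging and pivoting, using made-up order and product tables. `merge` plays a role similar to a VLOOKUP applied to every row at once, and `pivot_table` reshapes the long list of orders into a grid.

```python
import pandas as pd

orders = pd.DataFrame({
    "order_id": [1, 2, 3, 4],
    "product_id": ["A", "B", "A", "C"],
    "quarter": ["Q1", "Q1", "Q2", "Q2"],
    "qty": [5, 3, 2, 7],
})
products = pd.DataFrame({
    "product_id": ["A", "B", "C"],
    "name": ["Widget", "Gadget", "Gizmo"],
})

# merge: look up the product name for every order (left join keeps all orders).
merged = orders.merge(products, on="product_id", how="left")

# pivot_table: reshape rows into a product-by-quarter grid, filling gaps with 0.
grid = merged.pivot_table(
    index="name", columns="quarter", values="qty", aggfunc="sum", fill_value=0
)
print(grid)
```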

Data Structures in Pandas: Series and DataFrames

Pandas introduces two fundamental data structures: Series and DataFrames. A Series is a one-dimensional array-like structure that can hold data of any type, such as integers, floats, and strings. Each element in a Series has an associated index, which can be automatically generated or specified by the user. This index is akin to the row labels in an Excel spreadsheet. Series are particularly useful for handling time series data or any data that needs to be indexed and labeled.
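The sketch below contrasts an automatically generated index with a user-specified one. The values and labels are illustrative only.

```python
import pandas as pd

# Default index: 0, 1, 2, ... generated automatically.
temps = pd.Series([21.5, 23.0, 19.8])

# User-specified index: labels act like row headers in a sheet.
monthly_sales = pd.Series([1200, 1350, 980], index=["Jan", "Feb", "Mar"], name="sales")

print(temps.index.tolist())   # [0, 1, 2]
print(monthly_sales["Feb"])   # 1350, looked up by label
```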

DataFrames, on the other hand, are two-dimensional structures that resemble an Excel spreadsheet or a SQL table. A DataFrame consists of rows and columns, and each column can hold a different data type, so text, numbers, dates, and booleans can sit side by side. This flexibility allows for complex data manipulations and operations. DataFrames can be created from various sources, including CSV files, Excel files, SQL databases, and even other Pandas objects like Series. The ability to handle heterogeneous data types and perform operations across rows and columns makes DataFrames an indispensable tool for data analysis.
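A minimal sketch of both ideas: a DataFrame whose columns hold different types, and a DataFrame assembled from existing Series that share an index. All names and numbers are invented for the example.

```python
import pandas as pd

employees = pd.DataFrame({
    "name": ["Ana", "Ben", "Chloe"],        # text
    "age": [34, 28, 45],                     # integers
    "salary": [52000.0, 48500.5, 61000.0],   # floats
    "full_time": [True, False, True],        # booleans
})

# Each column keeps its own data type.
print(employees.dtypes)

# A DataFrame can also be built from Series that share an index.
q1 = pd.Series([10, 20], index=["North", "South"])
q2 = pd.Series([15, 25], index=["North", "South"])
by_quarter = pd.DataFrame({"Q1": q1, "Q2": q2})
print(by_quarter)
```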

Understanding how to work with Series and DataFrames is essential for leveraging the power of Pandas. These structures come with a rich set of methods and attributes that facilitate data manipulation tasks such as filtering, sorting, and grouping. For example, you can easily filter rows in a DataFrame based on specific conditions or sort data by one or more columns. The intuitive design and powerful functionalities of Series and DataFrames make them superior to traditional Excel structures, enabling users to perform more sophisticated data operations with ease.

Importing and Exporting Data with Pandas

One of the first steps in any data analysis workflow is importing data from various sources. Pandas provides a variety of functions to read data from different formats, including CSV, Excel, JSON, SQL, and more. The read_csv function is commonly used to import data from CSV files, while read_excel is used for Excel files. These functions are highly customizable, allowing users to specify parameters such as delimiter, header, and index column.
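The sketch below shows the delimiter, header, and index-column parameters in use. To keep it self-contained it reads from an in-memory text buffer; with a real file you would pass a path instead (for example a hypothetical "sales.csv"), and `pd.read_excel` accepts a path plus parameters such as `sheet_name` in a similar way.

```python
import io

import pandas as pd

# Stand-in for a semicolon-delimited file on disk.
raw = io.StringIO(
    "id;region;units\n"
    "101;North;120\n"
    "102;South;85\n"
)

df = pd.read_csv(
    raw,
    sep=";",         # delimiter used in the file
    header=0,        # first line holds the column names
    index_col="id",  # use the id column as the row index
)
print(df)
```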

Importing data into a Pandas DataFrame is a straightforward process, and the flexibility of these functions ensures that users can handle a wide range of data formats. Once the data is loaded, even messy text columns that are tedious to clean by hand in Excel can be processed programmatically.

Exporting data is equally important, and Pandas makes this task easy with functions like to_csv and to_excel. These functions allow users to save DataFrames to different file formats, ensuring that the processed data can be shared or used in other applications. For instance, after performing data cleaning and analysis, you might want to save the results to an Excel file for reporting purposes. The to_excel function lets you specify the sheet name, index, and other parameters to customize the output. This seamless integration with various file formats makes Pandas a versatile tool for data handling.
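Here is a minimal export sketch. It writes CSV text to an in-memory buffer so it runs anywhere; the equivalent Excel call is shown as a comment, because writing .xlsx files requires an Excel engine such as openpyxl to be installed. The file name "report.xlsx" is hypothetical.

```python
import io

import pandas as pd

summary = pd.DataFrame({"region": ["North", "South"], "total": [420, 310]})

# Write CSV text without the numeric row index (index=False).
buffer = io.StringIO()
summary.to_csv(buffer, index=False)
print(buffer.getvalue())

# An Excel export looks similar (requires an engine such as openpyxl):
# summary.to_excel("report.xlsx", sheet_name="Summary", index=False)
```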

In addition to CSV and Excel formats, Pandas supports reading and writing data from SQL databases. The read_sql function allows users to execute SQL queries and load the results into a DataFrame, while to_sql enables saving DataFrames to a database table. This functionality is particularly useful for users who work with relational databases and need to integrate SQL queries into their data analysis workflow. The ability to import and export data across different formats and systems underscores the versatility of Pandas, making it an essential tool for comprehensive data management.
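A round trip through a database can be sketched with Python's built-in sqlite3 module and an in-memory database, which Pandas accepts directly. This is an assumption-light stand-in for a real server; other databases are typically reached through an SQLAlchemy engine instead. The table and column names are made up.

```python
import sqlite3

import pandas as pd

# In-memory SQLite database standing in for a real database server.
conn = sqlite3.connect(":memory:")

orders = pd.DataFrame({"customer": ["Ana", "Ben", "Ana"], "amount": [30.0, 45.5, 12.0]})
orders.to_sql("orders", conn, index=False)  # write the DataFrame as a table

# Run a SQL query and load the result straight into a DataFrame.
totals = pd.read_sql(
    "SELECT customer, SUM(amount) AS total FROM orders "
    "GROUP BY customer ORDER BY customer",
    conn,
)
print(totals)
conn.close()
```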

Data Manipulation Techniques: Filtering, Sorting, and Grouping

Effective data manipulation is at the heart of any data analysis process, and Pandas excels in this area with its powerful and flexible functions. Filtering data is one of the most common tasks, and Pandas makes it easy to select rows based on specific conditions. For example, you can filter rows in a DataFrame where the values in a particular column meet a certain criterion. This is achieved using boolean indexing, where conditions are applied to columns and the resulting boolean series is used to index the DataFrame. Filtering allows users to focus on relevant subsets of data, facilitating more targeted analysis.
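A short sketch of boolean indexing on made-up data: a comparison produces a boolean Series, and passing that Series back into the DataFrame keeps only the matching rows, much like an AutoFilter.

```python
import pandas as pd

sales = pd.DataFrame({
    "region": ["North", "South", "East", "West"],
    "units": [120, 85, 97, 140],
})

mask = sales["units"] > 100  # boolean Series: True where the condition holds
high = sales[mask]           # keep only the rows where mask is True

# Combine conditions with & (and) or | (or); wrap each condition in parentheses.
south_or_big = sales[(sales["region"] == "South") | (sales["units"] > 130)]
print(high)
print(south_or_big)
```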

Sorting data is another essential manipulation technique, and Pandas provides the sort_values function to sort DataFrames by one or more columns. You can specify the sort order (ascending or descending) and handle missing values according to your requirements. Sorting helps in organizing data and identifying trends or patterns, making it easier to interpret and analyze. The ability to sort data efficiently is crucial for tasks like ranking, ordering, and prioritizing information, and Pandas offers the tools to perform these operations with ease.
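The following sketch sorts by two columns with a different order for each, and places missing values last. The team and score data are invented.

```python
import numpy as np
import pandas as pd

scores = pd.DataFrame({
    "team": ["B", "A", "A", "B", "C"],
    "points": [88, 92, np.nan, 75, 92],
})

# Sort by team ascending, then by points descending; missing values go last.
ranked = scores.sort_values(
    by=["team", "points"],
    ascending=[True, False],
    na_position="last",
)
print(ranked)
```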

Grouping data is a powerful technique for aggregating and summarizing information based on categorical variables. Rather than rebuilding pivot tables by hand every time the data changes, you can let Python regenerate them automatically and save hours.

Pandas provides the groupby function to group data by one or more columns and apply various aggregation functions like sum, mean, count, and more. This is similar to Excel’s pivot tables but offers much greater flexibility and scalability. Grouping allows users to gain insights into data by observing how different categories influence the values of other variables. The combination of filtering, sorting, and grouping functions in Pandas empowers users to perform sophisticated data manipulations that go beyond the capabilities of traditional spreadsheets.
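Here is a sketch of grouping on a small invented sales table: a single aggregate, several named aggregates at once, and `pivot_table`, which is the closest analogue to an Excel pivot table.

```python
import pandas as pd

sales = pd.DataFrame({
    "region": ["North", "South", "North", "South", "East"],
    "product": ["A", "A", "B", "B", "A"],
    "revenue": [100, 80, 150, 60, 90],
})

# One aggregate per group: total revenue by region.
by_region = sales.groupby("region")["revenue"].sum()

# Several aggregates at once, with named output columns.
summary = sales.groupby("region").agg(
    total=("revenue", "sum"),
    average=("revenue", "mean"),
    orders=("revenue", "count"),
)

# Pivot-table style grid: regions as rows, products as columns.
pivot = sales.pivot_table(
    index="region", columns="product", values="revenue", aggfunc="sum", fill_value=0
)
print(by_region)
print(summary)
print(pivot)
```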

Performing Data Analysis with Pandas

Data analysis is where Pandas truly shines, offering a wide range of tools and functions to extract insights from data. Descriptive statistics are fundamental to understanding the basic properties of your data, and Pandas provides built-in functions like describe, mean, median, std, and more. These functions allow users to quickly compute summary statistics for numerical columns, providing a snapshot of the data distribution and central tendencies. Descriptive statistics are the first step in any data analysis process, helping users to identify patterns and anomalies in the data.
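A minimal sketch of descriptive statistics on a single made-up column. `describe` bundles count, mean, standard deviation, minimum, quartiles, and maximum into one result.

```python
import pandas as pd

df = pd.DataFrame({"units": [120, 85, 97, 140, 85]})

stats = df["units"].describe()  # count, mean, std, min, quartiles, max
print(stats)
print(df["units"].mean())    # 105.4
print(df["units"].median())  # 97.0
```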

For more complex analysis, Pandas integrates seamlessly with other Python libraries like NumPy and SciPy. NumPy provides advanced mathematical functions and array operations, while SciPy offers additional statistical and computational tools. Combining Pandas with these libraries enables users to perform sophisticated analyses, such as regression, hypothesis testing, and clustering. This integration extends the analytical capabilities of Pandas, making it possible to tackle a wide range of data science tasks within a cohesive ecosystem.
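As a small illustration of that integration, the sketch below fits a straight-line regression with NumPy's `polyfit` directly on DataFrame columns and applies a NumPy function element-wise to a column. The advertising data is hypothetical; SciPy's statistical routines (for example in `scipy.stats`) accept columns in the same way but are not used here.

```python
import numpy as np
import pandas as pd

# Hypothetical data: advertising spend vs. units sold.
df = pd.DataFrame({
    "ad_spend": [1.0, 2.0, 3.0, 4.0, 5.0],
    "units": [3.1, 4.9, 7.2, 8.8, 11.0],
})

# Least-squares straight-line fit (degree 1) on two DataFrame columns.
slope, intercept = np.polyfit(df["ad_spend"], df["units"], deg=1)

# NumPy functions apply element-wise to whole columns.
df["log_units"] = np.log(df["units"])

print(f"units = {slope:.2f} * ad_spend + {intercept:.2f}")
```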

Time series analysis is another area where Pandas excels, offering functions to handle date and time data effectively. Pandas' datetime tools let users convert strings to datetime values with to_datetime, perform arithmetic on dates, and resample time series data to a different frequency with the resample method. Time series analysis is crucial for applications like forecasting, trend analysis, and anomaly detection. Pandas' ability to manage and analyze time series data makes it a valuable tool for users dealing with temporal datasets, providing the means to uncover insights and make informed decisions based on time-based patterns.
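The sketch below walks through the three steps on invented daily sales: converting text to datetimes, doing date arithmetic, and resampling to weekly totals. The "W" frequency used here groups Monday through Sunday and labels each week by its Sunday.

```python
import pandas as pd

df = pd.DataFrame({
    "date": ["2024-01-01", "2024-01-02", "2024-01-08", "2024-01-09", "2024-01-15"],
    "sales": [100, 120, 90, 110, 130],
})

# Convert text to real datetime values (similar in spirit to Excel's DATEVALUE).
df["date"] = pd.to_datetime(df["date"])

# Date arithmetic: add a fixed 30-day offset, and extract the weekday name.
df["due"] = df["date"] + pd.Timedelta(days=30)
df["weekday"] = df["date"].dt.day_name()

# Resample to weekly totals (weeks ending on Sunday).
weekly = df.set_index("date")["sales"].resample("W").sum()
print(weekly)
```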

Visualizing Data with Pandas: A Quick Overview

Visualization is a powerful way to interpret and communicate data, and Pandas integrates well with libraries like Matplotlib and Seaborn to create compelling visualizations. While Pandas itself offers basic plotting capabilities through the plot method, leveraging Matplotlib and Seaborn allows for more sophisticated and customizable charts. These libraries provide a wide range of plotting functions, including line charts, bar charts, histograms, scatter plots, and more. Visualization helps in identifying trends, comparing categories, and presenting data in an easy-to-understand format.

Creating visualizations with Pandas is straightforward, as DataFrames can be directly passed to plotting functions from Matplotlib and Seaborn. For instance, you can create a line chart by calling the plot method on a DataFrame, specifying the x and y axes. Customization options like labels, titles, and colors can be added to enhance the chart’s readability. Using Seaborn, you can create more complex plots like pair plots, heatmaps, and violin plots, which are useful for exploring relationships between variables and distributions.
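Here is a minimal plotting sketch, assuming Matplotlib is installed (Pandas uses it for the plot method). It draws a line chart from two made-up columns, sets labels and a title, and saves the figure to an in-memory PNG; the "Agg" backend lets it run without a display. Seaborn functions typically take the DataFrame through a `data=` argument in a similar spirit.

```python
import io

import matplotlib

matplotlib.use("Agg")  # render off-screen so the script runs without a display
import pandas as pd

df = pd.DataFrame({
    "month": ["Jan", "Feb", "Mar", "Apr"],
    "revenue": [1200, 1350, 980, 1500],
})

# DataFrame.plot wraps Matplotlib and returns an Axes for further customization.
ax = df.plot(x="month", y="revenue", kind="line", title="Monthly revenue",
             color="tab:blue", legend=False)
ax.set_xlabel("Month")
ax.set_ylabel("Revenue")

# Save the chart; pass a file path such as "revenue.png" to write to disk instead.
buffer = io.BytesIO()
ax.figure.savefig(buffer, format="png")
```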

Effective visualization not only aids in data exploration but also in communicating findings to stakeholders. Well-designed charts and plots can convey complex information in a visually appealing and understandable manner, making it easier to share insights and drive decision-making. Python's plotting libraries can also produce more dynamic visuals than Excel's basic built-in charts.

Pandas’ integration with visualization libraries ensures that users can create high-quality visualizations as part of their data analysis workflow. By mastering the art of visualization, Excel users can elevate their data presentations and unlock new ways to interpret and communicate their findings.

Conclusion and Next Steps for Excel Users Transitioning to Pandas

Transitioning from Excel to Pandas is a transformative journey that opens up new possibilities for data manipulation and analysis. By understanding the basics of Pandas, setting up the environment, and recognizing the key differences between Pandas and Excel, users can start leveraging the powerful data structures and functions offered by Pandas. Importing and exporting data, performing data manipulation, and conducting analysis are seamless with Pandas, providing a robust framework for handling complex datasets efficiently.

This transition requires a shift in mindset: moving from manual, GUI-based operations to automated, code-driven processes, which is exactly what this guide to Pandas for Excel users aims to help with. This shift not only enhances efficiency but also introduces reproducibility and scalability to data workflows. Learning to write and execute Python code is an essential part of this transition, and resources like online tutorials, documentation, and community forums can provide valuable support. Practicing with real-world datasets and experimenting with Pandas functions will build confidence and proficiency in using the library.

The journey doesn’t end with mastering Pandas; it is just the beginning of exploring the broader ecosystem of data science tools available in Python. Integrating Pandas with libraries like NumPy, SciPy, Matplotlib, and Seaborn will further enhance analytical capabilities and visualization techniques. By continuing to learn and expand their skillset, Excel users can unlock the full potential of data power, transforming how they interact with information and driving more informed and impactful decisions.